The technical interview rethought: an approach to evaluating communication and code comprehension

Software development productivity and quality of work are notoriously hard to measure. This complicates hiring decisions, as there isn't an easy way to evaluate a developer's true skill set. Consequently, many companies fall back on proxies in an attempt to assess competency. One popular proxy is the dreaded white board coding exercise.

At CodeScene, we decided to avoid white board coding tasks during our hiring process. White board coding exercises simply lack what behavioral scientists would call ecological validity: the white board represents a setting that doesn't reflect the actual work. Candidates are evaluated on factors that are neither relevant to nor representative of a developer's everyday tasks. Worse, there's an elevated risk that you miss out on a good candidate for the wrong reasons. In this article we share our alternative approach that avoids these problems.

White board coding exercises lack ecological validity

I've been through white board coding exercises myself earlier in my career. I happen to perform reasonably well in that setting, yet I never found the exercise valuable. More specifically, white board coding exercises fail to evaluate what truly matters if you want to build an elite development team. Here's the background to support that statement:

- Code is read much more frequently than it's written.

- We spend 60% of our time trying to understand existing code.

This means that, as a developer, I will spend more time reading and trying to comprehend existing code than actually, well, coding. On top of that, there's the social aspect:

- Can I take part in a technical conversation to explain an existing solution and justify its trade-offs?

- How do I react to feedback, design suggestions, and well-meaning criticism?

- How attached do I become to "my" code and my prior decisions?

I could be a great programmer, but if I perform poorly on these social aspects, then I'm unlikely to function well in a team with a common goal.

Evaluating what matters

The safest way to hire is via your network. There's no substitute for having worked closely with someone on a real project with real constraints and real users. But every so often we need to evaluate potential hires without having the benefit of shared work history.

One approach that has worked well for us is to let the potential hire run a GitHub project of their choice through the free version of CodeScene, preferably their own open source project. Earlier in the recruitment process, we vet our candidates by scanning their open source code, so by this point we already know that they write high-quality code. During the technical interview, two of our tech leads pair up with the candidate and do a walkthrough of the results. This offers several advantages and a very different dynamic compared to a contrived white board exercise:

- Less stress: being evaluated at a white board can be stressful. A candidate's personal open source code is a much better real-world representation of their coding skills. It's code written without artificial time pressure.

- Relevance: there's no substitute for eating your own dog food. You immediately get a sense of our product -- the one you could potentially work on -- and can relate its analysis to code that you are already intimately familiar with.

Over the past year I have found that the best candidates have already run CodeScene on their own open source code before we even ask them to do so. It's only fair: just as you as an employer evaluate a candidate, we need to understand that we -- as a company -- are being evaluated too. The hiring process should be bi-directional, and good developers have plenty of job options these days. As a company, you need to offer something exciting and relevant.

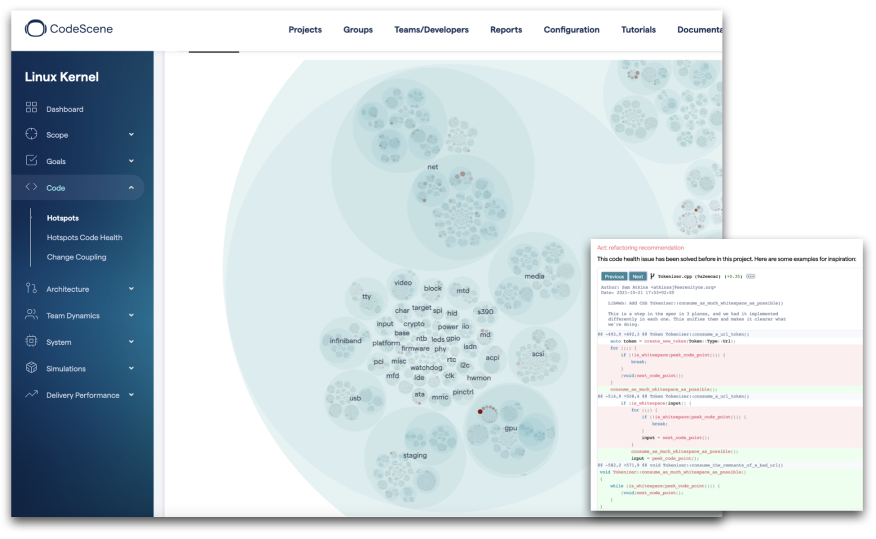

An example of a CodeScene walkthrough of the Linux kernel, complete with auto-generated refactoring examples.

Now, during the interview we let CodeScene point us to the weaker parts of the codebase. Maybe there's this brain class that you never cared to refactor, perhaps you crammed a bit too much complexity into a specific method, or you might have chosen the wrong abstractions and ended up with tight change coupling between your classes. A perfect codebase is as rare as the yeti, perhaps even more so.

This is where the conversation gets interesting. By pointing out the feedback from the tool, we give the candidate a chance to interpret and comment on the results. Most people either are already aware of the problems or take the feedback as a learning opportunity. These are the signals you look for. Be careful when you spot defensive behavior.

Finally, we ask the candidate how they would refactor the code, given the issues we just discussed. At this point we typically offer our own suggestions, just like we would during a code review. With the best candidates, you quickly find yourself in a rewarding conversation where you forget that you're running a technical interview. There's much to be excited about when it comes to software design, and the people we look for are people eager to learn. As a bonus, our new hire gets a head start and is already familiar with our main product as a user; the onboarding started during the hiring process.