
While onboarding a few on-prem customers, we ran into slow analyses. CodeScene is usually pretty fast (running the Linux analysis takes about 40 minutes on a MacBook Pro laptop with a fast SSD), but two customers trying to analyze their big repositories saw their analyses run for more than a day.

There were three distinct cases:

- One customer running CodeScene in Docker inside a Linux virtual machine hosted on Windows Server 2019;
- The same customer later switching to a Tomcat deployment directly on the Windows Server host;
- Another customer using our Docker image and deploying CodeScene with Azure Containers.

Windows Server - Linux VM and JVM bug

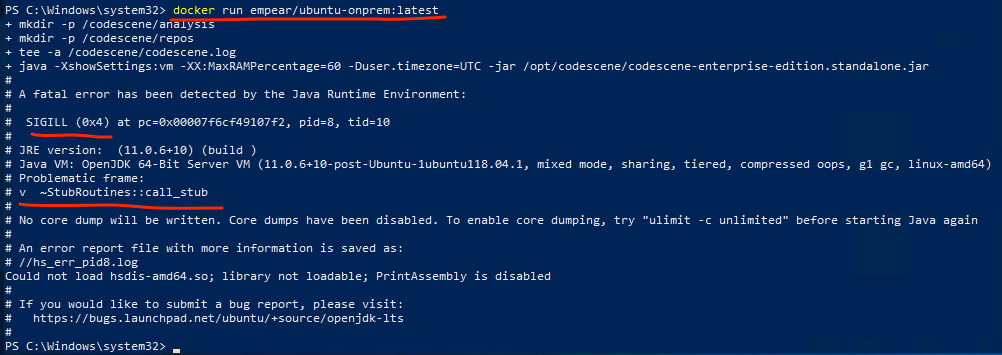

First, they tried to run CodeScene using our docker image and hit a JVM bug right from the start:

SIGILL JVM error

As my colleague found, this was due to incorrect detection of AVX instruction family support (vectorized processor instructions) in the JVM. There’s an open issue for that: https://bugs.openjdk.java.net/browse/JDK-8238596.

Once we identified the root cause, the workaround was relatively easy: don't use AVX.

docker run -e JAVA_OPTIONS=-XX:UseAVX=0 empear/ubuntu-onprem:latest

To find out whether the CPU (as seen by the VM) supports AVX, run lscpu and check whether 'avx' appears in the Flags section:

lscpu - missing AVX flag

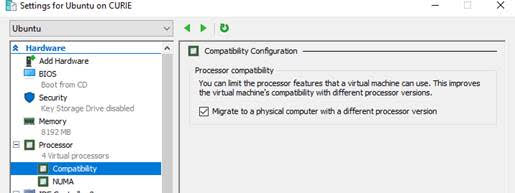
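If you want to decide automatically whether to pass the workaround flag, a minimal sketch could parse the flags from /proc/cpuinfo (or from lscpu output) and emit -XX:UseAVX=0 only when plain avx is not listed. This assumes a Linux guest and only inspects the advertised CPU flags; the helper names cpu_flags, has_avx and java_options are hypothetical and not part of CodeScene.

```python
import os
from typing import Set


def cpu_flags(text: str) -> Set[str]:
    """Collect CPU feature flags from /proc/cpuinfo or lscpu output."""
    flags: Set[str] = set()
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        # /proc/cpuinfo lines look like "flags\t\t: fpu ... avx ...",
        # lscpu prints "Flags:   fpu ... avx ...".
        if sep and key.strip().lower() == "flags":
            flags.update(value.split())
    return flags


def has_avx(text: str) -> bool:
    # Exact match: "avx2" or "avx512f" on their own do not count as plain "avx".
    return "avx" in cpu_flags(text)


def java_options(text: str) -> str:
    """Hypothetical helper: return the JVM workaround flag only when AVX is not advertised."""
    return "" if has_avx(text) else "-XX:UseAVX=0"


if __name__ == "__main__":
    cpuinfo = "/proc/cpuinfo"  # Linux-only; assumed to exist inside the VM or container
    if os.path.exists(cpuinfo):
        with open(cpuinfo) as f:
            opts = java_options(f.read())
        print(opts or "AVX is advertised; no workaround flag needed")
```

The resulting string could then be passed as the JAVA_OPTIONS value in the docker run command above.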

As we later found, AVX instructions weren't available because of the "Compatibility Configuration" on the Windows Server host, which allows VMs to be migrated easily between physical hosts:

Windows Server - VM compatibility configuration

The customer ended up installing Tomcat directly on the Windows host, but it was a really tricky support case anyway.

Windows Server - Tomcat and small heap size

After the customer switched from Docker to a Tomcat deployment, the analysis was still very slow. We suspected slow IO again, because we were dealing with a slow-IO problem at another customer's installation at the same time (see the Azure Containers section below). But it turned out they were using really fast SAN (Storage Area Network) disk storage.

Eventually, we found the root cause: the small default heap size set by Tomcat. Although the machine had 32 GB of RAM, the default maximum heap size set by the Tomcat Windows installer was only about 250 MB. After we raised the maximum heap size manually to 12 GB, the analysis finished within an hour (and they have a huge repository):

Tomcat heap settings on Windows
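A quick sanity check of the configured heap can catch this kind of problem early. The sketch below parses a JVM size string such as -Xmx12g (k, m and g suffixes; no suffix means bytes) and warns when it falls below a minimum. The function names and the 4g default threshold are hypothetical, chosen only for illustration and not an official CodeScene sizing recommendation.

```python
import re
from typing import Optional

# Multipliers for the size suffixes handled here (no suffix means bytes).
_UNITS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_jvm_size(value: str) -> int:
    """Parse a JVM memory size such as '12g', '256m' or '-Xmx12g' into bytes."""
    value = value.strip()
    if value.startswith("-Xmx"):
        value = value[len("-Xmx"):]
    match = re.fullmatch(r"(\d+)([kKmMgG]?)", value)
    if match is None:
        raise ValueError(f"unrecognized JVM size: {value!r}")
    number, unit = match.groups()
    return int(number) * _UNITS[unit.lower()]


def heap_warning(xmx: str, min_heap: str = "4g") -> Optional[str]:
    """Return a warning when the configured max heap is below min_heap.

    The 4g default is a hypothetical threshold for illustration only.
    """
    heap = parse_jvm_size(xmx)
    floor = parse_jvm_size(min_heap)
    if heap < floor:
        return f"max heap {heap // 1024 ** 2} MB is below {floor // 1024 ** 2} MB"
    return None


if __name__ == "__main__":
    for setting in ("-Xmx256m", "-Xmx12g"):
        print(setting, "->", heap_warning(setting) or "OK")
```

With these inputs, a setting close to the ~250 MB default produces a warning, while the 12 GB setting passes.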

Azure Containers - (really) slow IO

This was another painful experience with slow shared file storage, this time on Azure. A customer analyzing a huge repository couldn't get results even after a few days! The problem is still being investigated, but we believe the culprit is the shared file storage used by Azure Containers (and also by Azure App Service).

CodeScene is an IO-intensive application and needs a fast disk, so any kind of distributed file system makes it very sad. For this reason we don't recommend using Azure Files, AWS EFS and similar services. I ran CodeScene via Azure App Service last year and found it at least 10x slower on medium-sized repositories than a deployment on a plain Linux VM.
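One rough way to compare candidate storage before deploying is to time many small synchronous file operations, assuming that per-operation latency is what hurts on network file systems. The sketch below is our own illustration, not a CodeScene tool: the function name small_file_benchmark and the /mnt/shared path are hypothetical, and the printed numbers are only meaningful relative to each other on the same machine.

```python
import os
import tempfile
import time


def small_file_benchmark(directory: str, files: int = 200, size: int = 4096) -> float:
    """Write, fsync and re-read `files` small files under `directory`.

    Returns files per second. A rough smoke test, not a substitute
    for a proper storage benchmark.
    """
    payload = os.urandom(size)
    start = time.perf_counter()
    # Work in a throwaway subdirectory; its cleanup is included in the timing.
    with tempfile.TemporaryDirectory(dir=directory) as tmp:
        for i in range(files):
            path = os.path.join(tmp, f"f{i}.bin")
            with open(path, "wb") as f:
                f.write(payload)
                f.flush()
                os.fsync(f.fileno())  # force the write through to the storage
            with open(path, "rb") as f:
                if f.read() != payload:
                    raise IOError(f"read-back mismatch for {path}")
    elapsed = time.perf_counter() - start
    return files / max(elapsed, 1e-9)


if __name__ == "__main__":
    # Hypothetical paths: a local disk vs. a mounted network share.
    for candidate in (tempfile.gettempdir(), "/mnt/shared"):
        if os.path.isdir(candidate):
            rate = small_file_benchmark(candidate)
            print(f"{candidate}: {rate:.0f} small files/s")
```

A large gap between the local directory and the shared mount would be a hint that the mount is a poor fit for an IO-heavy workload.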
